The Art Of SERP Sniffing

SERP Sniffing is a technique used by a number of thin affiliates, blackhats and spammers to identify profitable long tail keywords to optimise for. Typically it charts thousands of easy pickings across the SERPs to bring in long term, scalable traffic. I would like to explore this technique and demonstrate how it works, especially since I tried it myself to prove that it can and does work.

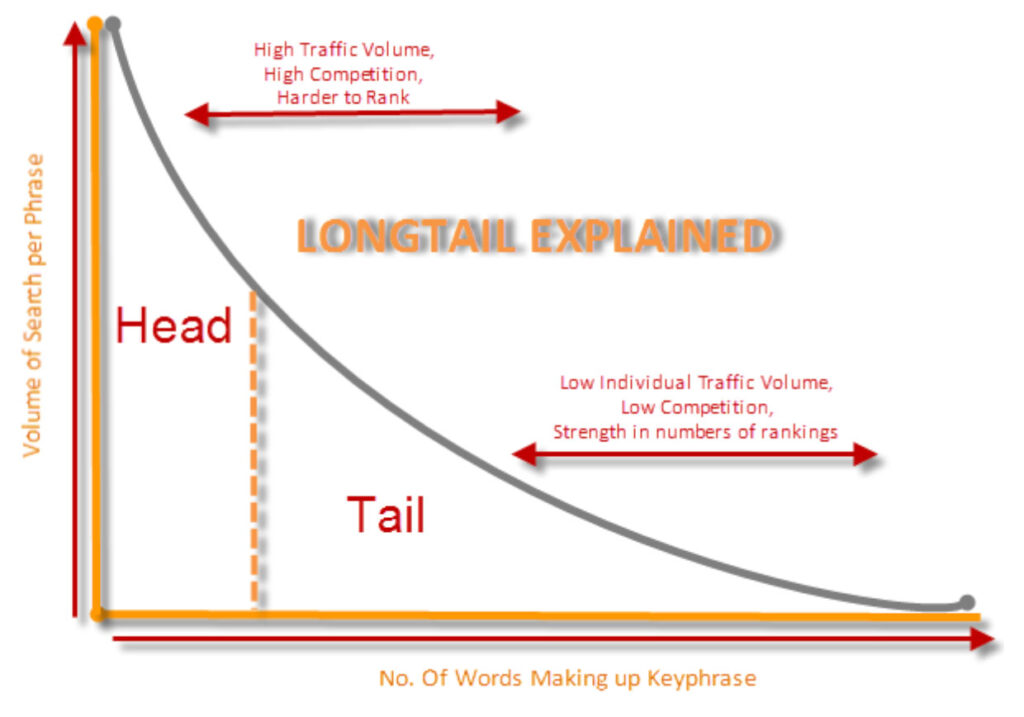

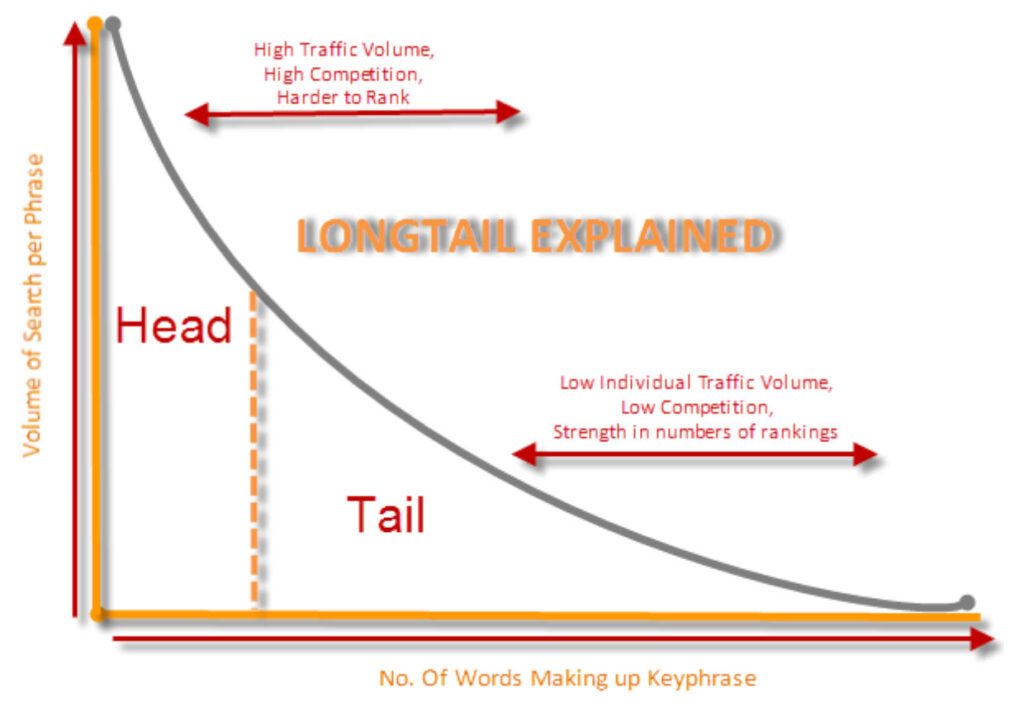

However, it is necessary to define the long tail and issues associated with it before going on to the technique itself.

What’s the most difficult part of SEO for the long tail? In my opinion there are two parts that make long tail strategies difficult:

1. Identifying Long Tail Keywords

By definition, long tail keywords make up the multitude of variations in any keyword target campaign. This means that any number of strings may be attached to the original set of keywords / keyphrases to make up a number of subsets, which could further branch off into sub-subsets. Most of these are individually low volume, but combined they make up a huge share of related traffic that sites ought to target. However, because of their low search volume, most of these keywords do not show up in most keyword tools.

Keyword Longtail Explained

How do you identify these? Or do you blindly pursue keyword variations by stuffing your target pages with as many variations as you can think of?

2. Identifying Ranking Opportunities Amongst Long Tail Keywords

So let’s say you have somehow compiled a list of X,000,000 Longtail Keyword variations. Well done. Awesome. Your keyword skills rock. But which ones should you try and target first? How easy is it to rank for these? As a rule, long tails tend to be easier to rank for, but assuredly, you won’t be the only person trying to get those rankings either.

How do you work out how quickly you can rank for KW X over KW Y without carrying out detailed SERP scraping exercises to build some sort of value model at scale?

The Problem In Decision Making

So ideally you want to work on the easy ranking keywords first and then worry about the rest. Or you may want to work on the pot of long tails with the higher combined traffic value first. Either way you need the data that shows:

- Ease of Ranking

- Potential Traffic per LTK (Long Tail Keyword)

Neither of these is easy to define, nor is there a ready guide where you can grab those numbers from. So how do you go about defining a detailed long tail strategy that is based on “real numbers” with regard to traffic and rankings?

Deciding obviously requires real figures and real potential in order to define priority. After all, isn’t it about profitability? Time is money and all that? Can you really waste time chasing after rankings that don’t actually have traffic potential?

Show Me the Money

The Spammers Guide To SERP Sniffing

Warning: This is a HIGH risk strategy that may get you banned, and I don’t actually advise it. The following technique is for educational purposes only, and I do not condone Search Engine Spamming.

As I have discussed in my previous posts on Black Hat SEO and SEO Automation, there are some industrial level methods to drive thousands of rankings fairly easily. This is easier when targeting Long and Very Long Tail traffic. However, the strategies aren’t sustainable, as they are prone to creating “Burn Sites” which may gain short term rankings but do not last. This is because Google’s algo does recognize such sites and penalizes them, or “deprioritises” them in the SERPs.

However, short term rankings and traffic are great too. Not for sustainable businesses, but as research for sustainable businesses. Imagine if you raked in all the relevant data that these “burn” sites gave you, then used it on legitimate sites? That’s what SERP Sniffing is.

Utilising Gray / Blackhat techniques to research SERP weaknesses so as to exploit them for Whitehat Purposes.

So How Does It Work?

Well to start off with, take your Sets of Two Word Phrases. Categorise them logically as you would in the absence of data, into their long tail targets.

In essence you could have:

Phrases:

- Blue Widgets

- Red Widgets

- Pink Widgets

Categories:

- Phrase + Location

- Phrase + Review

- Phrase + Buy

- Phrase + For Sale

- Cheap + Phrase

- Free + Phrase

- ETC ETC
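The phrase and category grid above can be sketched in a few lines of Python. This is only an illustration of how the combinations multiply: the names `PHRASES`, `TEMPLATES`, `LOCATIONS` and `build_seed_keywords` are my own, and the location list is a made-up placeholder for the "Phrase + Location" category.

```python
# Two word phrases taken from the example above.
PHRASES = ["blue widgets", "red widgets", "pink widgets"]

# One template per category; "{phrase}" marks where the phrase goes.
TEMPLATES = [
    "{phrase} review",
    "{phrase} buy",
    "{phrase} for sale",
    "cheap {phrase}",
    "free {phrase}",
]

# Hypothetical locations, used only to illustrate "Phrase + Location".
LOCATIONS = ["london", "manchester"]


def build_seed_keywords(phrases, templates, locations):
    """Return every phrase/category combination as a seed keyword."""
    seeds = []
    for phrase in phrases:
        # Modifier categories (review, buy, cheap, ...).
        seeds.extend(template.format(phrase=phrase) for template in templates)
        # Location category.
        seeds.extend(f"{phrase} {location}" for location in locations)
    return seeds


seeds = build_seed_keywords(PHRASES, TEMPLATES, LOCATIONS)
print(len(seeds), "seed keywords")
```

Each seed then becomes a category on the automated site, so adding one phrase or one modifier grows the grid by a whole row or column.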

Now you set up an automated site, where you pull in content in some real volume on the Categories and Sub Categories related to your Keyword Sets as identified above. Make sure you scale the operation such that the posts per category are coming in fast and hard once the site is indexed.

Use a “burn” link network to scale up back links (these only work in the short term and are also easily penalized).

Using your analytics, you should be able to identify keyword combinations that start driving traffic. In my experience such sites die out within 2 to 14 days, so you need to run daily exports of keywords and run ranking reports against those keywords.

Once the site has been burnt, you now have data:

- The Keywords that drove traffic

- Identified SERP positions for such keywords.

- Ease with which positions were garnered.
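To show what that data set might look like once the daily exports are merged, here is a minimal sketch. It assumes each export row is a `(day, keyword, visits, position)` tuple. That layout, the function names and the sample rows are all hypothetical, not the format of any particular analytics or rank tracking tool.

```python
from collections import defaultdict


def summarise_exports(rows):
    """Merge daily rows into one record per keyword.

    rows: iterable of (day, keyword, visits, position) tuples (assumed layout).
    """
    summary = defaultdict(
        lambda: {"visits": 0, "best_position": None, "first_seen_day": None}
    )
    for day, keyword, visits, position in rows:
        entry = summary[keyword]
        entry["visits"] += visits
        # Lower position number means a better ranking.
        if entry["best_position"] is None or position < entry["best_position"]:
            entry["best_position"] = position
        # The first day a keyword drove traffic is a rough proxy for ease.
        if entry["first_seen_day"] is None or day < entry["first_seen_day"]:
            entry["first_seen_day"] = day
    return dict(summary)


def prioritise(summary):
    """Order keywords by total visits (highest first), then by best position."""
    return sorted(
        summary,
        key=lambda kw: (-summary[kw]["visits"], summary[kw]["best_position"]),
    )


# Made-up sample rows, for illustration only.
sample_rows = [
    (1, "cheap blue widgets", 12, 3),
    (2, "cheap blue widgets", 20, 2),
    (2, "red widgets review", 5, 7),
]

summary = summarise_exports(sample_rows)
for keyword in prioritise(summary):
    print(keyword, summary[keyword])
```

Sorting by visits is just one choice. You could equally sort by `first_seen_day` if ease of ranking matters more to you than traffic.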

Real Example

I ran this experiment on a site that could in no way be linked to my main sites, keeping the domain reg, domain ownership, hosting etc. all different to anything that could be linked back to me, either via the algo or manual human review. The site is defunct and the niche I ran it for is one I don’t work in. This was purely an exercise in experimenting. I didn’t try to monetise the site.

I picked a Keyword Set of 4 two word combinations, each further broken down into 6 subsets, which brought my total categories to 24.

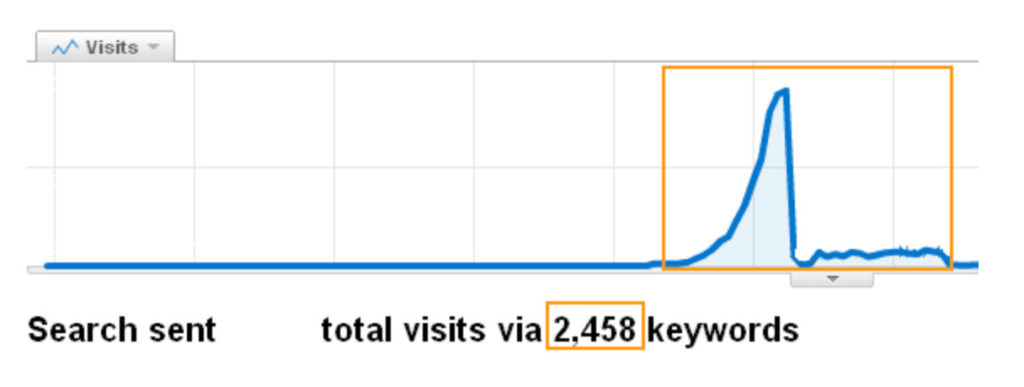

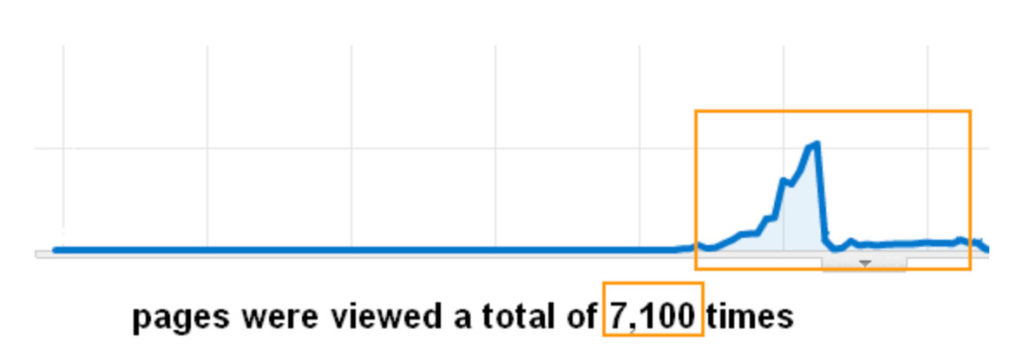

The site ran for a total of 15 days from indexation, and started bringing in traffic. See the Traffic Spike:

SERP Sniffing Traffic Spike

The strategy identified over 2,400 keywords that drove traffic to the site in the days it ranked. Think about this. I had an original target of 24 keywords (categories!). Automating these with random, yet related content multiplied the keywords into a data set of 2,400. That’s roughly 100 variations per category!

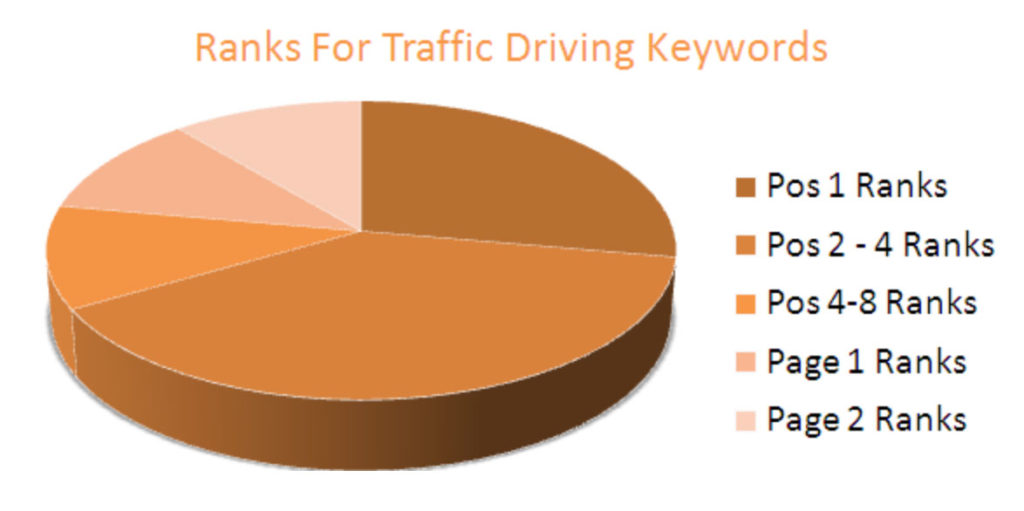

Keyword Traffic Rank Breakdown

Cross referencing these keywords against the SERP rankings showed that over 90% of these rankings were on page one. So I have a pot of 2,400 keywords that drove traffic to my site in the space of 14 days, with 60% in positions between 1 and 4.
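The cross referencing itself is simple arithmetic. As a rough sketch, assuming you have one best position per keyword and that "page one" means positions 1 to 10, the shares can be computed like this. The function name and the sample positions are made up for illustration and are not my actual data.

```python
def share_in_range(positions, low, high):
    """Fraction of keywords whose position falls between low and high inclusive."""
    if not positions:
        return 0.0
    hits = sum(1 for position in positions if low <= position <= high)
    return hits / len(positions)


# Made-up positions, one per keyword.
sample_positions = [1, 2, 5, 11]

page_one_share = share_in_range(sample_positions, 1, 10)  # assumes 10 results per page
top_four_share = share_in_range(sample_positions, 1, 4)
print(f"page one: {page_one_share:.0%}, positions 1-4: {top_four_share:.0%}")
```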

Now don’t assume that the traffic that comes in via this method is crap either. Check out the page view stats:

Traffic Page Views

Assuming that this is the normal trend of traffic for this category, if I maintained those rankings on a legitimate site, with good quality content and pages dedicated to these LTKs, then I stand to gain (7,100 / 14) × 365 ≈ 185,107 page views annually. (I am not disclosing traffic data, sorry!)
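The projection is a straight line extrapolation of the 14 day total to a full year. A small sketch of the same sum, where the function name is my own and the big assumption is that the daily average holds all year:

```python
def annual_page_views(page_views, days_observed, days_per_year=365):
    """Scale an observed page view total to a year, assuming a flat daily average."""
    return page_views / days_observed * days_per_year


# 7,100 page views over 14 days, as in the example above.
projection = annual_page_views(7100, 14)
print(round(projection))  # 185107
```

Swap in your own totals and observation window to compare categories on the same annual basis.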

Summary

As I demonstrated above, the technique does work. I don’t advise it, for obvious reasons. However, compare YOUR Long Tail Keyword research against this method. Are you surprised that Spammers, Blackhats and Feed Affiliates can still profit from the SERPs? Your data is based on intelligent guesswork. Their data is based on real opportunities. Guess who prioritises limited resources better?